Cre·ate (verb) is a participatory video installation that invites the audience to create their own butterfly, which follows the path traced by their hand. Regardless of background, anyone can create a butterfly that flies along a distinctive path they define.

Oct 20 - Nov 1, 2022

(2 weeks)

Blender

MediaPipe

OpenCV

Python

Inspiration

As AI-based art generation tools like Dall-E become more accessible to the public, I started to wonder what it means to create. What makes someone the author of a creation? If an audience member prompts software like Dall-E, which was developed by someone else, and the software generates the artwork, was the work created by the audience member, the developer, or the software? Through this project, I wanted to explore what makes a creation belong to an individual and how much viewers take authorship into consideration when seeing a collection of generated creations.

Process

Since creations are often made by hand, I decided to capture the movement of the creator’s hand during the act of creation. I also made this an interactive, participatory project rather than one focused on an artisan’s hands, to challenge the common misconception that only artists can make art.

For the object that users would generate with a simple gesture, I created a butterfly mesh with a mirrored wing-flapping animation in Blender. I used a color ramp in the shader editor so that each generation would produce a butterfly of a different color.

I randomized the color to add some diversity to the generated butterflies. Looking back, perhaps the butterflies should have incorporated the creator’s visual information, such as an image of their hand, so that each butterfly would be more personalized to its creator.

Why Butterfly?

I chose the butterfly as the main object because creation starts with an idea. Butterflies symbolize metamorphosis or the soul/mind in many cultures, which fits this project’s exploration of capturing the act of creation (bringing an idea into physical space).

I placed a pedestal with a webcam in the center of the room, facing the wall used for projection. The user can move their hand as much as they want under the webcam, and when they’re ready to create a new butterfly, all they have to do is make a fist and then open it as if they’re showing something.

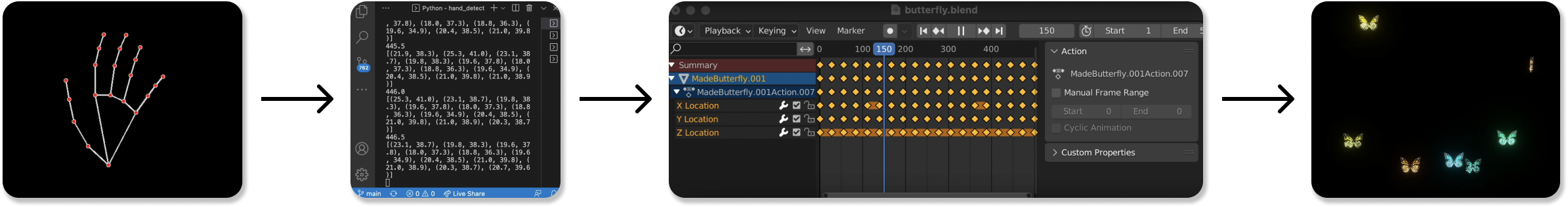

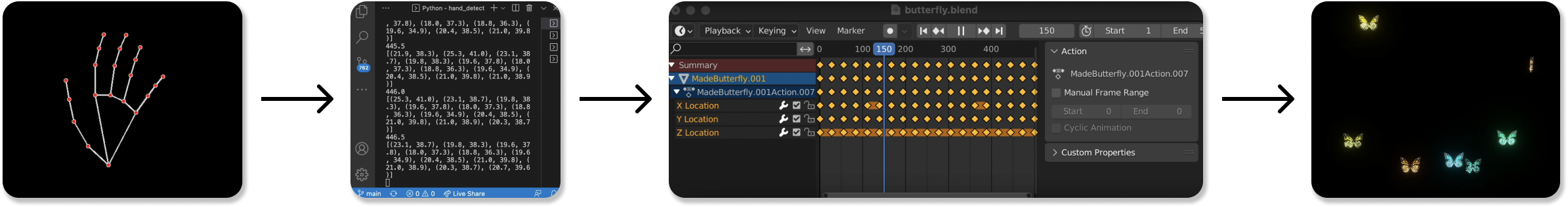

The step-by-step view of the interaction above shows how a new butterfly mesh is generated and added to the animation when the fist-opening motion is detected.

Capture System Pipeline

When an audience member is ready to create a new butterfly that follows their hand trace, they close their fingers into a fist and then open the hand to release the butterfly. The path the new butterfly follows is determined by the trace of the audience member’s thumb root joint. When the fist opens, the path data is written to a file, which Blender Python reads at each frame to generate a new butterfly with animation keyframes at the hand path locations. I limited the length of the path list so that Blender won’t freeze while importing and creating a large number of keyframes.
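The capture side of this pipeline can be sketched roughly as follows. This is a minimal illustration, not the installation’s actual code. It assumes the hand landmarks arrive as a list of 21 normalized (x, y) tuples in MediaPipe Hands order, that the “thumb root joint” corresponds to landmark 1 (the thumb CMC), and that a finger counts as curled when its tip is closer to the wrist than its PIP joint. The names `ReleaseDetector`, `write_path`, and `MAX_PATH_LEN`, along with the JSON file format, are hypothetical.

```python
import json
from collections import deque

# MediaPipe Hands landmark indices (21 points per hand).
WRIST = 0
THUMB_CMC = 1  # assumption: this is the "thumb root joint" being traced
FINGERS = [(6, 8), (10, 12), (14, 16), (18, 20)]  # (PIP, TIP) for four fingers

MAX_PATH_LEN = 120  # hypothetical cap that keeps the keyframe count small


def _dist2(a, b):
    """Squared 2D distance between two (x, y) points."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def curled_fingers(landmarks):
    """Count fingers whose tip is closer to the wrist than their PIP joint."""
    wrist = landmarks[WRIST]
    return sum(
        _dist2(landmarks[tip], wrist) < _dist2(landmarks[pip], wrist)
        for pip, tip in FINGERS
    )


class ReleaseDetector:
    """Tracks the thumb root and reports a path on a fist-then-open gesture."""

    def __init__(self, max_len=MAX_PATH_LEN):
        self.path = deque(maxlen=max_len)  # oldest points drop off automatically
        self.armed = False  # True once a fist has been seen

    def update(self, landmarks):
        """Feed one frame of landmarks; return the path on release, else None."""
        self.path.append(tuple(landmarks[THUMB_CMC][:2]))
        curled = curled_fingers(landmarks)
        if curled == 4:
            self.armed = True
        elif curled == 0 and self.armed:
            self.armed = False
            released = list(self.path)
            self.path.clear()
            return released
        return None


def write_path(path, filename):
    """Write the released path to a JSON file for the Blender side to read."""
    with open(filename, "w") as f:
        json.dump(path, f)
```

In a live loop, each webcam frame would be passed through MediaPipe, the detected hand converted to (x, y) tuples, and the result fed to `update`. Whenever `update` returns a list, that list would be handed to `write_path`. The bounded `deque` is one simple way to enforce the path length limit described above.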

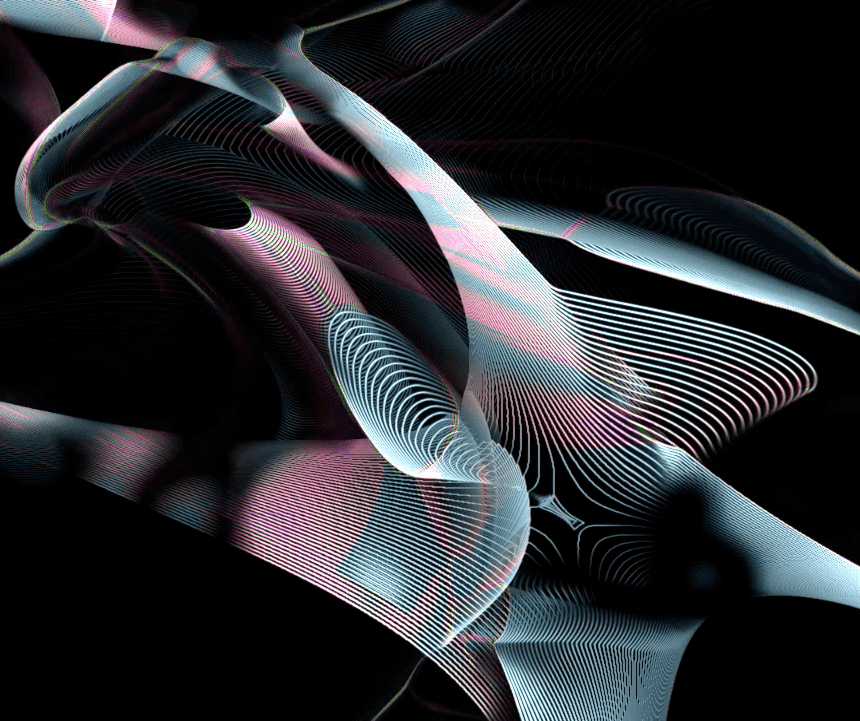
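On the Blender side, turning a saved hand path into animation keyframes might look something like the sketch below. It is an illustration under several assumptions rather than the project’s actual script: the path file is assumed to be a JSON list of normalized [x, y] pairs, the template object is assumed to be named "Butterfly", and the `step` and `scale` values are placeholders. The helper names `path_to_keyframes` and `spawn_butterfly` are hypothetical.

```python
import json


def path_to_keyframes(path, start_frame=1, step=2, scale=10.0):
    """Map normalized (x, y) points to (frame, (x, y, z)) tuples.

    Image y grows downward, so it is flipped to point up (+Z) in the scene.
    """
    return [
        (start_frame + i * step, ((x - 0.5) * scale, 0.0, (0.5 - y) * scale))
        for i, (x, y) in enumerate(path)
    ]


def spawn_butterfly(path_file, template_name="Butterfly"):
    """Run inside Blender: duplicate the template and keyframe its location."""
    import bpy  # only available in Blender's bundled Python

    with open(path_file) as f:
        path = json.load(f)

    # Assumption: a template object with this name exists in the scene.
    template = bpy.data.objects[template_name]
    butterfly = template.copy()  # linked duplicate, shares the mesh data
    bpy.context.collection.objects.link(butterfly)

    # Start the flight at the current frame and key one location per point.
    start = bpy.context.scene.frame_current
    for frame, location in path_to_keyframes(path, start_frame=start):
        butterfly.location = location
        butterfly.keyframe_insert(data_path="location", frame=frame)
    return butterfly
```

Keeping the coordinate mapping in a pure function makes it possible to check outside Blender, while `spawn_butterfly` only runs where `bpy` is available. Because the number of keyframes equals the number of path points, capping the path length directly bounds the work Blender does for each new butterfly.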

Installation Views

Reflection

Despite my initial prediction that viewers would easily identify the butterfly they created from the trace they drew with their hand, I realized this wasn’t the case, especially when many butterflies were flying around on screen.

As I mentioned earlier, I wish I had built the generation function with more customization so that anyone could easily find their creation while also appreciating how their work harmonizes with those of many others. This project was a great opportunity for me to learn and explore MediaPipe and hand tracking with OpenCV, but I also realized I could have used the hand tracking data for more than just gathering the past locations of a single point on the hand.

If I work on this project again, I would also capture how people move their hands in addition to where they move them. I think exploring the “how” would offer people more interesting insights over time, as different people move their bodies differently even when prompted to make the same movement.